Maintaining the search presence for a website depends on multiple technical factors working correctly.

This 20-minute SEO checklist provides a high-level overview of the state of your search presence and an early warning for any developing issues that need attention.

Out of all the SEO tasks, technical SEO is the most straightforward in terms of what needs to be done and how to do it.

A useful approach to managing the workload is to use a core group of technical SEO factors to monitor the site and search presence health weekly.

This list is remarkably applicable for almost any individual or team across a variety of industries.

Of course, there may be additional factors that can be added that are specific to your situation, but these points can form the backbone of a useful weekly checkup.

Is 20 Minutes A Week Enough?

I can already hear the counterarguments from full-time technical SEO pros: “You can’t even scratch the surface in 20 minutes a week.”

I agree.

But the point of this guide is to demonstrate how to monitor your most critical issues from a high level and diagnose where to spend more energy digging in.

Some weeks, a 20-minute checkup may be all you need.

Other weeks, you may find a disastrous canonicalization error and call in the troops for an all-hands-on-deck assault.

If you’re lagging on monitoring your technical SEO, you’re about to get a big efficiency boost by following this weekly workflow.

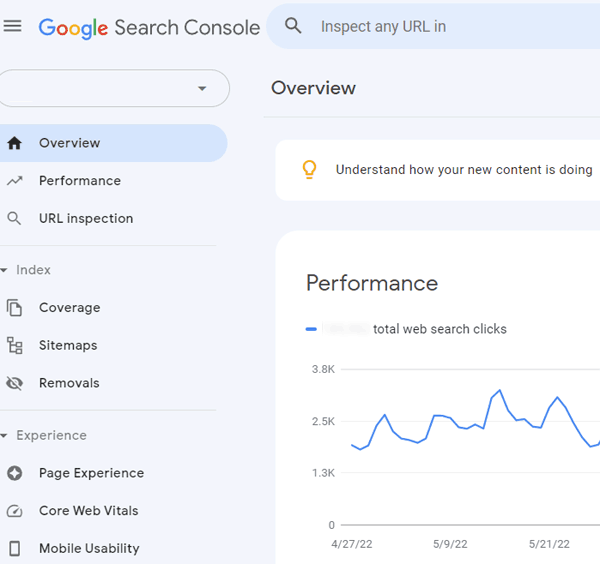

1. Search Console Overview (Minutes 0-10)

There’s no better place to start than popping over to Search Console for a high-level scan of everything.

The data comes straight from Google, the dashboard is already built for you, and you already have it set up for your account.

What we’re looking for are glaring errors.

We’re not digging into pages to analyze small keyword movements.

We’re looking for the big kahunas of problems.

Start With The Overview Section:

Review these data points:

- In the Performance summary, are there any drastic drops in traffic that are out of the ordinary? A massive decrease may indicate a sitewide technical SEO problem.

- In the Coverage summary, are there any spikes in “Pages with errors”? If this is your first time checking in a while, you’ll want to dig into historical ones.

- In the Enhancement overviews, look for spikes up and down in features such as AMP, Q&As, Mobile Usability, and more. Are these moving as expected? If you see irregularities, drill down.
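One way to make "out of the ordinary" concrete for the Performance check is to compare the latest week against a baseline of earlier weeks. The sketch below is only an illustration: the weekly click counts are made-up numbers you would copy from the Performance report by hand, and the 30% threshold and the function names are assumptions, not anything Search Console defines.

```python
from statistics import median


def drop_vs_baseline(weekly_clicks: list[int]) -> float:
    """Return the fractional drop of the latest week versus the median of earlier weeks.

    A positive value means traffic fell; a negative value means it rose.
    """
    if len(weekly_clicks) < 2:
        raise ValueError("need at least two weeks of data")
    *history, latest = weekly_clicks
    baseline = median(history)
    if baseline == 0:
        return 0.0  # no meaningful baseline to compare against
    return (baseline - latest) / baseline


def is_drastic_drop(weekly_clicks: list[int], threshold: float = 0.30) -> bool:
    """Flag the latest week if it fell by at least `threshold` (assumed: 30%)."""
    return drop_vs_baseline(weekly_clicks) >= threshold


# Hypothetical weekly click totals, oldest first.
clicks = [1200, 1150, 1230, 1180, 700]
if is_drastic_drop(clicks):
    print(f"Investigate: clicks fell {drop_vs_baseline(clicks):.0%} below the baseline")
```

Using the median rather than the mean keeps one unusually good or bad earlier week from skewing the baseline.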

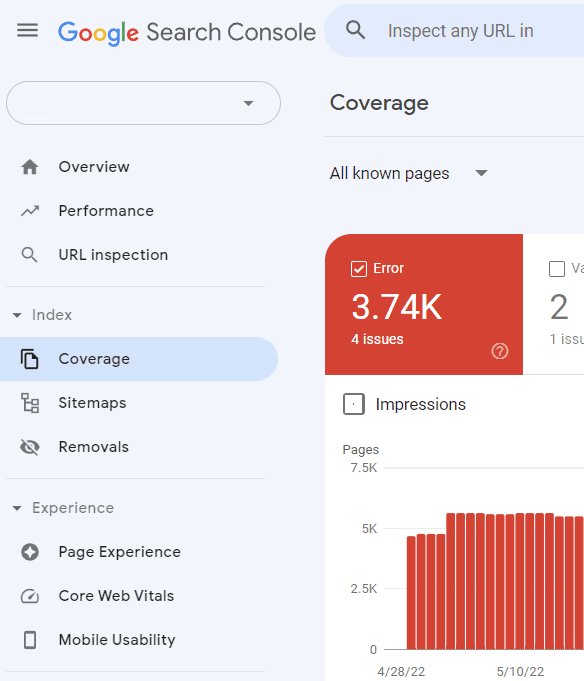

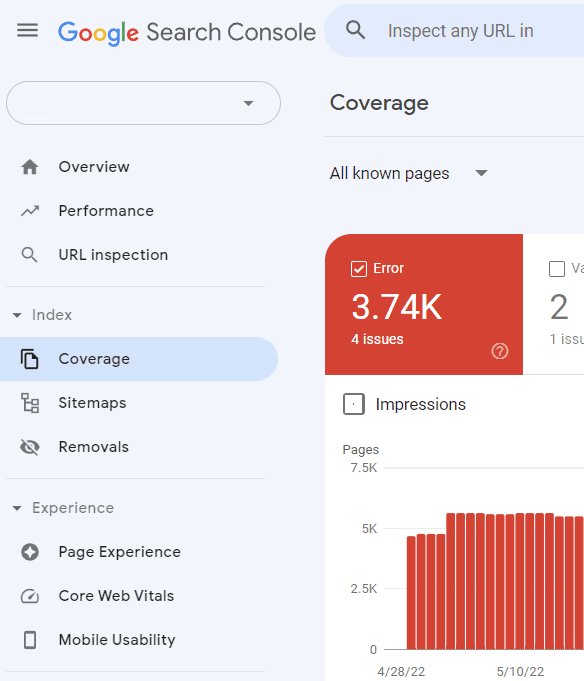

Next, Move On To The Coverage Section:

Screenshot from Google Search Console, July 2022

The Index Coverage section is key to understanding how Google’s indexing and crawling of your site are going.

This is where Google communicates errors related to indexing or crawling.

The biggest thing to look for is the default Error view, and you’ll want to read through the Details section.

Scan line by line and look at the trend column. If anything looks out of the ordinary, you’ll want to dig in more and diagnose.

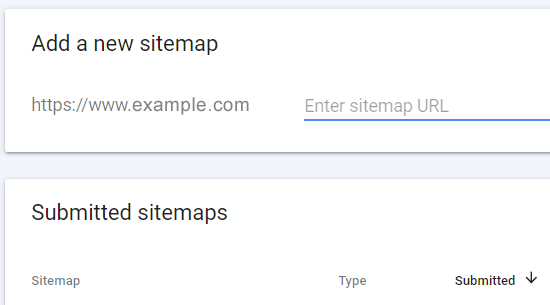

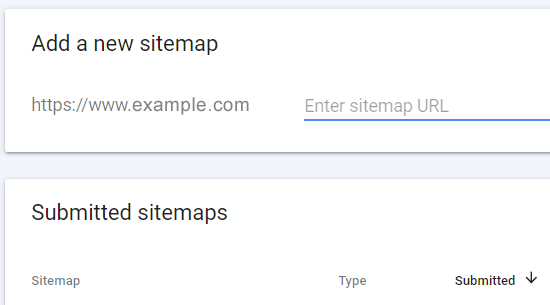

View The Sitemaps Section:

Screenshot from Google Search Console, July 2022

This provides a wealth of information on your sitemaps and their corresponding pages.

It’s especially helpful when you have multiple sitemaps that represent different sections of pages on your site.

You want to look at the Last Read column to ensure each sitemap has been read recently. How recent is recent enough varies depending on your site.

Then, you’ll want to check the Status column to see the highlighted errors. Make a note to take action if the number of errors has risen noticeably since last week.

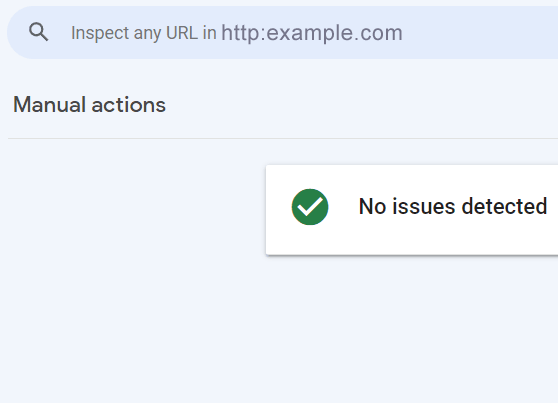

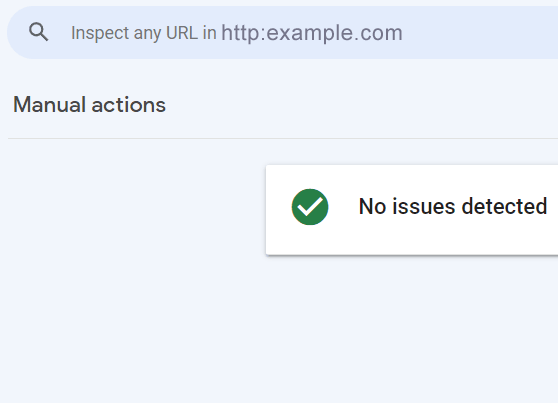

Check For Manual Actions:

Screenshot from Google Search Console, July 2022

This is a big one. If you’re doing everything right, this will rarely, if ever, have any manual actions listed.

But it’s worth checking weekly to give you peace of mind. You want to find it before your CEO does.

Search Console has a wealth of information, and you could spend days digging into each report.

These high-level checks represent the most important summary dashboards to check weekly.

Briefly reviewing each of these sections and making notes can be done in as little as 10 minutes a week. But digging into the issues you find will take a lot more research.

2. Check Robots.txt (Minutes 11-12)

The robots.txt file is one of the most important ways to tell search engines where you want them to crawl and which pages you don’t want crawled.

Super important: The robots.txt file controls only the crawling of pages, not their indexing. A blocked URL can still end up in the index if other pages link to it.

Some small sites have one or two lines in the file, while massive sites have incredibly complex setups.

Your average site will have just a few lines, and it rarely changes week to week.

Despite the file rarely changing, it’s important to double-check that it’s still there and that nothing unintentional was added to it.

In the worst-case scenario, such as a website relaunch or a new site update from your development team, the robots.txt file might be changed to “Disallow: /” to block search engines from crawling pages while they are under development on a staging server. The file is then brought over to the live site with the disallow directive intact.

Make sure this is not on the live website:

User-agent: *
Disallow: /

But if it’s a normal week, there won’t be any changes, and it should only take a minute.

Every site has a different configuration, so each week you’ll want to compare the live file against your best-practice setup to ensure nothing has changed in error.
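If you keep a known-good copy of the file, both checks described above can be scripted. The following is a minimal sketch using Python's standard library: the function names and the sample file contents are hypothetical, and fetching the live file is left out so the example stays self-contained. Note that `urllib.robotparser` may not interpret every rule exactly the way Google's own parser does, so treat the result as a first alarm rather than a verdict.

```python
import difflib
from urllib.robotparser import RobotFileParser


def blocks_whole_site(robots_txt: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt text forbids crawling the root path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


def changes_since_baseline(baseline: str, current: str) -> list[str]:
    """Return only the added (+) and removed (-) lines between two versions."""
    diff = difflib.unified_diff(
        baseline.splitlines(),
        current.splitlines(),
        fromfile="baseline",
        tofile="live",
        lineterm="",
    )
    return [
        line
        for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


# Hypothetical file contents: a saved known-good copy and this week's live file.
known_good = "User-agent: *\nDisallow: /admin/\n"
live = "User-agent: *\nDisallow: /\n"

if blocks_whole_site(live):
    print("Warning: the live robots.txt blocks crawling of the entire site")
for line in changes_since_baseline(known_good, live):
    print(line)
```

On a normal week the diff is empty and the check takes seconds, which leaves the rest of the minute for reading the file yourself.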

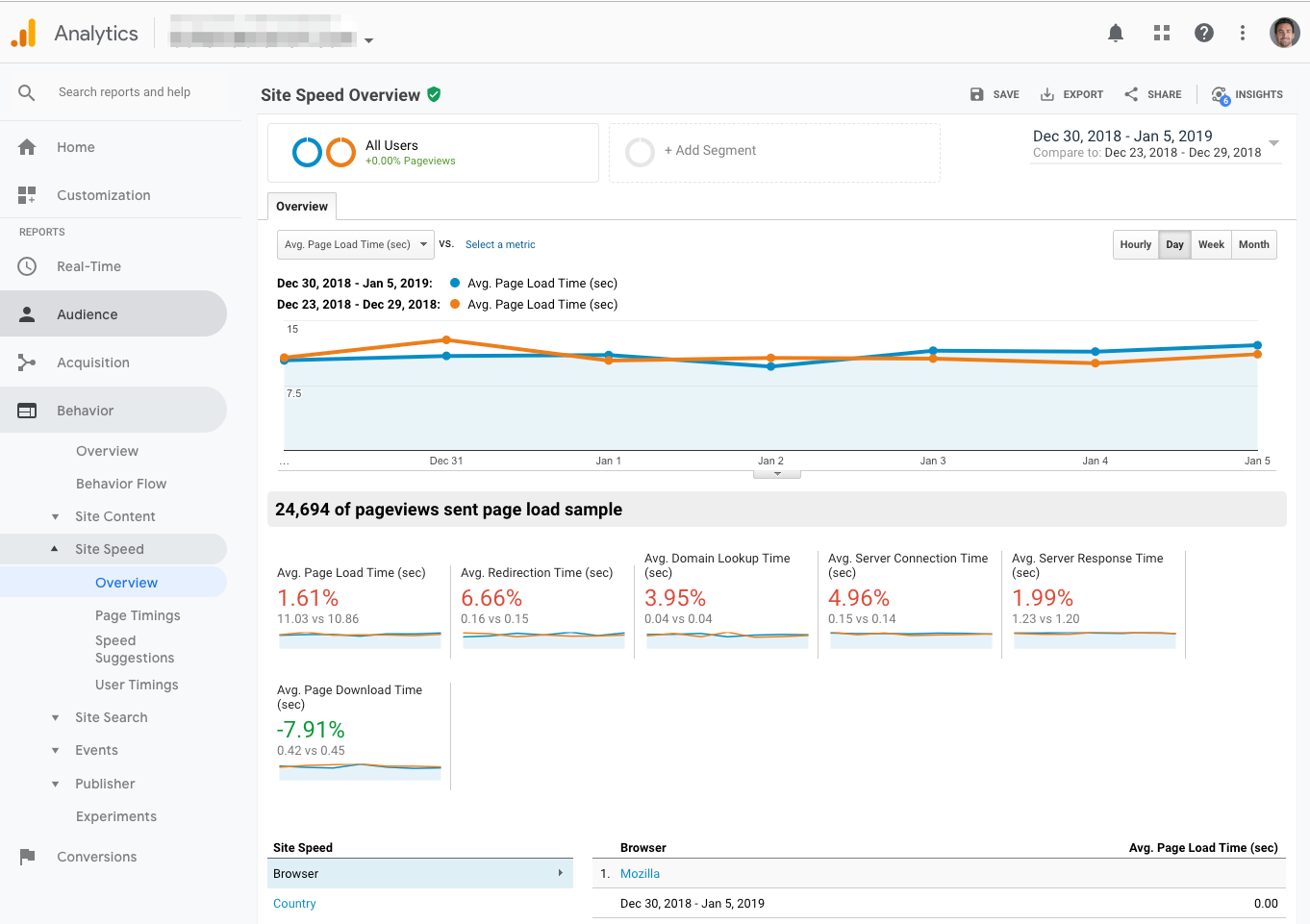

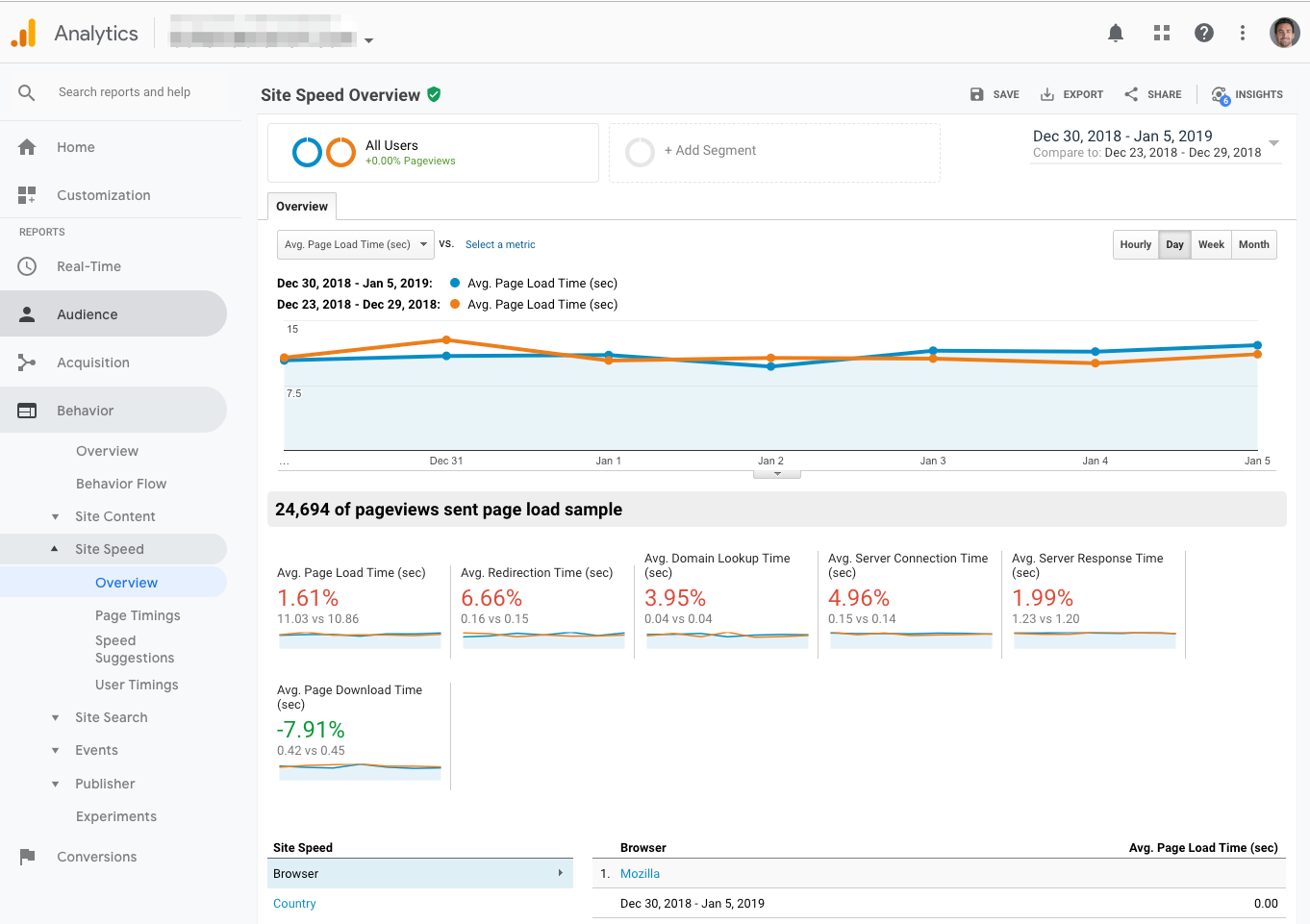

3. Review Page Speed In Google Analytics (Minutes 13-15)

For a high-level look at page speed across your site, we’ll hop over to Google Analytics.

Go to Behavior > Site Speed > Overview

Screenshot from Google Analytics, July 2022

I recommend comparing the past seven days to the previous seven days to get a sense of any big changes.
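The seven-day comparison is simple arithmetic, and a short sketch makes it explicit. This assumes you have exported or copied 14 daily average page load times (in seconds, oldest first) from the report; the numbers and the function name below are invented for illustration.

```python
from statistics import mean


def week_over_week(daily_load_seconds: list[float]) -> tuple[float, float, float]:
    """Compare the latest 7 days with the 7 days before them.

    Returns (previous average, current average, fractional change).
    A positive change means pages got slower.
    """
    if len(daily_load_seconds) != 14:
        raise ValueError("expected exactly 14 daily values, oldest first")
    previous = mean(daily_load_seconds[:7])
    current = mean(daily_load_seconds[7:])
    change = (current - previous) / previous
    return previous, current, change


# Hypothetical daily averages in seconds: previous week, then the past week.
timings = [2.1, 2.0, 2.2, 2.1, 1.9, 2.0, 2.1, 2.9, 3.1, 3.0, 2.8, 3.2, 3.0, 2.9]
previous, current, change = week_over_week(timings)
print(f"Average load time went from {previous:.2f}s to {current:.2f}s ({change:+.0%})")
```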

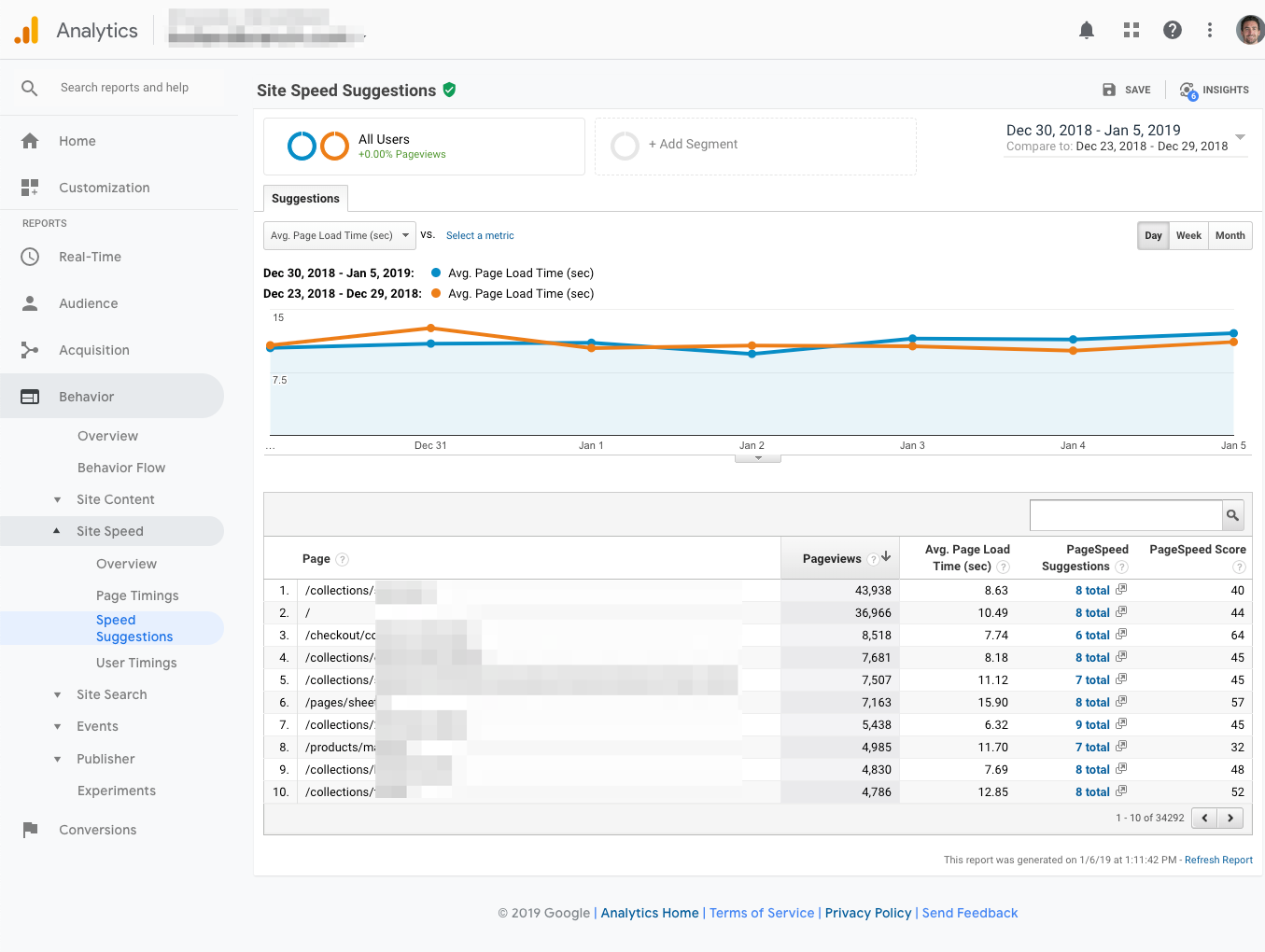

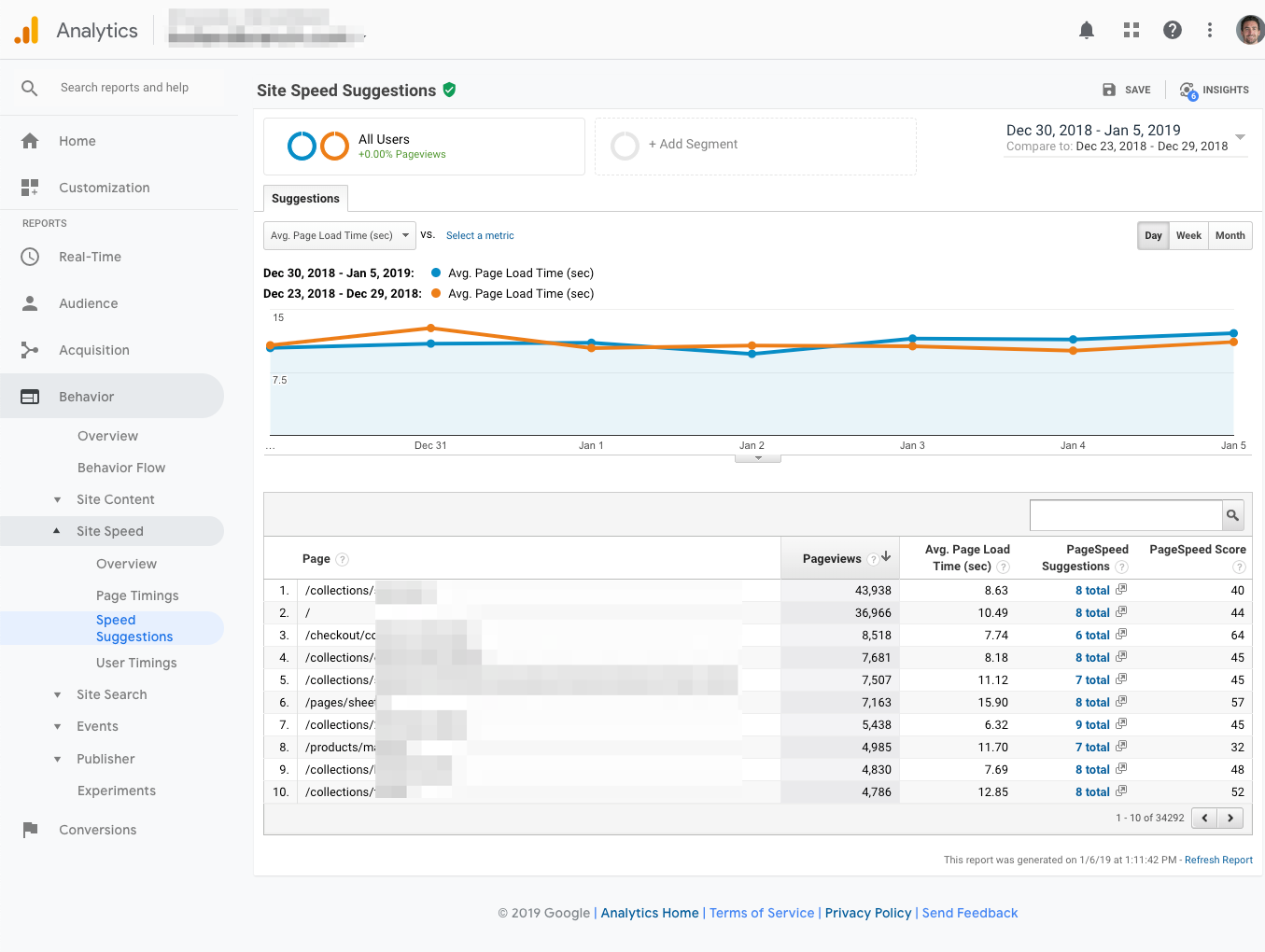

To dig in further, you’ll want to go to Speed Suggestions to get page-by-page timings and suggestions:

Screenshot from Google Analytics, July 2022

The goal is to get a high-level sense of whether anything has gone wrong in the last week.

To take action, you’ll want to test individual pages with a few other tools that get into the nuts and bolts.

Many other tools exist to dig in further and diagnose specific page speed issues.

A useful tool from Google for measuring and diagnosing page speed issues is Lighthouse, which can be accessed through the DevTools built into every Chromium-based browser.

4. Review The Search Results (Minutes 16-18)

There’s nothing better than getting down and dirty in the actual Search Engine Results Pages (SERPs).

Gianluca Fiorelli said it best:

It surprises a lot how many SEOs rarely directly look at the SERPs, but do that only through “the 👀 “ of a tool. Shame! Look at them & youl’ll:

1) see clearly the search intent detected by Google

2) see how to format your content

3) find On SERPS SEO opportunities pic.twitter.com/Wr4OYAcmiG

Gianluca Fiorelli (@gfiorelli1), October 23, 2018

Though tools are useful and time-saving, don’t neglect to review the actual search results yourself, and not only when the tools report significant changes.

Just type your keywords into the search engine and check whether the rankings your tools report match what you see in the SERPs.

It’s 100% normal that there are slight variations in rankings because search results are dynamic and can change depending on factors such as geography, search history, device, and other personalization-related reasons.

Spot check the SERPs weekly, and you’ll sleep better at night.

5. Visually Check Your Site (Minutes 19-20)

Just as many SEO professionals skip checking the SERPs, it’s all too common for them to default to analysis tools rather than hand-checking the website.

Yes, it’s not as “scalable” to check the website by hand, but it’s necessary to pick up some obvious issues that can get lost or undetected in a tool’s report.

You’ll want to rapidly test a few of your top pages to keep this to two minutes.

Remember, this is spot-checking for big issues that stand out, not a granular review of sentences, grammar, and paragraphs.

Start at the home page and scroll through, looking for anything clearly broken. Click through the site, checking different page types and looking for anything off.

And while you’re at it, take a quick look at the code.

Using Chrome, navigate to:

Developer Tools > View Page Source

Again, this is a great practice to do weekly as a high-level checkup.
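What to look for in the source is a judgment call. As one possible illustration, the sketch below pulls three things out of a page's HTML that are easy to miss by eye: the title, a robots meta tag containing "noindex", and the canonical URL. The choice of these three signals, the class and function names, and the sample markup are all assumptions made for the example. It uses only Python's built-in HTML parser and expects you to paste in or load the source yourself.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the title, robots meta content, and canonical link from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title_parts: list[str] = []
        self.robots: str | None = None
        self.canonical: str | None = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.robots = (attr.get("content") or "").lower()
        elif tag == "link" and "canonical" in (attr.get("rel") or "").lower().split():
            self.canonical = attr.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_parts.append(data)


def inspect_source(html: str) -> dict:
    """Return the title, whether the page is marked noindex, and its canonical URL."""
    parser = HeadSignals()
    parser.feed(html)
    parser.close()
    return {
        "title": "".join(parser.title_parts).strip(),
        "noindex": parser.robots is not None and "noindex" in parser.robots,
        "canonical": parser.canonical,
    }


# Hypothetical page source, as copied from the browser's source view.
sample = (
    "<html><head><title>Home</title>"
    '<meta name="robots" content="noindex, nofollow">'
    '<link rel="canonical" href="https://example.com/">'
    "</head><body></body></html>"
)
print(inspect_source(sample))
```

An unexpected "noindex" or a canonical URL pointing at the wrong page on one of your top pages is exactly the kind of big issue this spot check is meant to catch.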

You’ll feel much better knowing you’re getting your own eyeballs directly on the thing that’s making you money and not depending on some abstraction via a third-party tool.

Conclusion

The 20-minute technical SEO checkup provides a high-level overview of a website’s overall SEO health and an early warning when something is out of place, before the problem escalates into a catastrophic failure.

The point is to quickly determine that all of the website’s vital signs (such as crawling and indexing) are healthy and that site performance is optimal.

I also recommend doing a periodic full technical SEO audit of your site to get a full diagnosis and uncover the deeper issues.

Featured Image: Kite_rin/Shutterstock
